Wednesday, April 16, 2014

cmix version 1

I have released cmix version 1. It is now ranked first on the Silesia Benchmark and second on the Large Text Compression Benchmark.

Saturday, March 01, 2014

Vector Earth

I added a new HTML5 experiment to my website: Vector Earth. Inspired by the xkcd "Now" comic, I downloaded some vector map data and experimented with making some of my own map projections. The first projection I implemented was the azimuthal equidistant projection, similar to the one used in the xkcd comic. If you are interested in map projections, Jason Davies has implemented some very cool demos.
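The azimuthal equidistant projection places every point at its true great-circle distance and direction from a chosen center. Here is a minimal Python sketch of the standard spherical forward formulas; the function name and the North Pole default center are assumptions for illustration, not the code behind Vector Earth.

```python
import math

def azimuthal_equidistant(lat, lon, lat0=90.0, lon0=0.0):
    """Project (lat, lon) in degrees to (x, y), centered on (lat0, lon0).

    x and y are in radians of arc, so the distance from (0, 0) equals the
    angular great-circle distance from the center."""
    phi, lam = math.radians(lat), math.radians(lon)
    phi1, lam0 = math.radians(lat0), math.radians(lon0)
    dlam = lam - lam0
    cos_c = (math.sin(phi1) * math.sin(phi)
             + math.cos(phi1) * math.cos(phi) * math.cos(dlam))
    c = math.acos(max(-1.0, min(1.0, cos_c)))  # angular distance from center
    if c < 1e-12:
        return 0.0, 0.0  # the center itself
    if math.pi - c < 1e-12:
        raise ValueError("the antipode of the center has no unique position")
    k = c / math.sin(c)  # scale factor that makes radial distances true
    x = k * math.cos(phi) * math.sin(dlam)
    y = k * (math.cos(phi1) * math.sin(phi)
             - math.sin(phi1) * math.cos(phi) * math.cos(dlam))
    return x, y
```

Multiplying x and y by the Earth's mean radius (about 6371 km) turns them into kilometres; for drawing, they would instead be scaled to canvas pixels.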

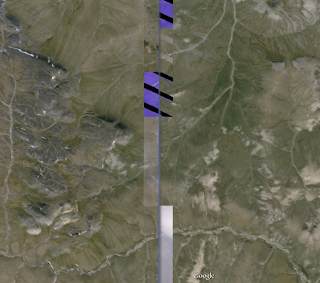

While I was implementing the map projection, I encountered a bug where there was an unnatural vertical line cutting through Siberia. I went to Google Maps to see what Siberia was supposed to look like, and was amused to find that Google Maps seemed to have the same bug!

In both the vector and satellite data, there are strange discontinuities along this line. It turns out that this line is the antimeridian, where longitude 180 degrees meets -180 degrees.
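Where data crosses the antimeridian, consecutive vertices jump from about +180 to about -180 degrees, and a renderer that connects them naively draws a line all the way across the map. One common fix is to split such polylines at the crossing. The Python sketch below assumes longitudes lie in [-180, 180], the input is non-empty, and every segment takes the shorter way around; it is a general technique, not necessarily what Vector Earth or Google Maps does.

```python
def split_at_antimeridian(points):
    """Split a polyline [(lon, lat), ...] wherever a segment crosses the
    180/-180 meridian, adding an interpolated vertex on each side."""
    parts = [[points[0]]]
    for (lon1, lat1), (lon2, lat2) in zip(points, points[1:]):
        if abs(lon2 - lon1) > 180:  # the short way round crosses the antimeridian
            # Unwrap lon2 so the segment is continuous, e.g. 170 -> -170 becomes 170 -> 190.
            lon2u = lon2 + 360 if lon2 < lon1 else lon2 - 360
            edge = 180 if lon1 > 0 else -180
            t = (edge - lon1) / (lon2u - lon1)  # fraction of the segment before the crossing
            lat_cross = lat1 + t * (lat2 - lat1)
            parts[-1].append((edge, lat_cross))
            parts.append([(-edge, lat_cross)])  # continue on the other side of the map
        parts[-1].append((lon2, lat2))
    return parts
```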

Wednesday, February 26, 2014

xkcd now clock

I liked the clock in today's xkcd. However, it only has a 15-minute granularity. Well, this is something I can fix with HTML5 canvas! I checked whether anyone else has done this, and I think I am the first.
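A dial that turns once per day moves 360 / (24 × 4) = 3.75 degrees per 15-minute step, so removing the granularity just means computing the angle from the exact time instead of snapping it to the last step. A minimal Python sketch, assuming the angle is measured from midnight UTC (the post does not describe the comic's orientation or sign conventions, and the function name is hypothetical):

```python
from datetime import datetime, timezone

def rotation_degrees(now, step_minutes=None):
    """Angle of a dial that turns 360 degrees per day, measured from UTC midnight.

    With step_minutes=15 the angle snaps down to the last 15-minute step;
    with step_minutes=None it moves continuously."""
    now = now.astimezone(timezone.utc)
    minutes = (now.hour * 60 + now.minute
               + now.second / 60 + now.microsecond / 6e7)
    if step_minutes:
        minutes -= minutes % step_minutes  # snap down to the last step
    return minutes / (24 * 60) * 360.0
```

In a redraw loop this would be called with `datetime.now(timezone.utc)` on every frame.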

Saturday, February 22, 2014

Texture

Can you guess what this is?

It is a zoomed-in picture of the lid of a container of yogurt I ate yesterday. It reminded me of the patterns produced by the Belousov-Zhabotinsky reaction. I have no idea why the lid has such a strange pattern on it.

cmix update

cmix has now surpassed paq8l on both the Calgary corpus and enwik8. cmix is probably now one of the most powerful general-purpose compression programs. It runs at about the same speed as paq8l, but uses much more memory (which gives it an advantage on large files such as enwik8).

cmix currently uses an ensemble of 112 independent predictors (paq8l uses 552). cmix also uses a more extensive neural network than paq8l to mix the models: a four-layer network with 528,496 neurons (paq8l uses a three-layer network with 3,633 neurons).
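The mixing idea can be illustrated with the single-layer logistic mixer described in Data Compression Explained. Each model's probability is "stretched" into the logistic domain, combined with learned weights, and "squashed" back into a probability; after each bit, the weights take an online gradient step on coding cost. This is a simplified Python sketch, not cmix's four-layer network; the class name and learning rate are illustrative assumptions.

```python
import math

def stretch(p):
    """Logit: map a probability to the logistic domain (clamped to avoid infinities)."""
    p = min(max(p, 1e-6), 1.0 - 1e-6)
    return math.log(p / (1.0 - p))

def squash(x):
    """Inverse of stretch: the logistic function."""
    return 1.0 / (1.0 + math.exp(-x))

class Mixer:
    """Single-layer logistic mixer; a simplified stand-in for cmix's network."""

    def __init__(self, n_inputs, learning_rate=0.02):
        self.weights = [0.0] * n_inputs
        self.learning_rate = learning_rate
        self.inputs = [0.0] * n_inputs
        self.p = 0.5

    def predict(self, probs):
        """Combine each model's P(bit = 1) into one prediction."""
        self.inputs = [stretch(p) for p in probs]
        dot = sum(w * x for w, x in zip(self.weights, self.inputs))
        self.p = squash(dot)
        return self.p

    def update(self, bit):
        """Gradient step on coding cost once the actual bit is known."""
        err = bit - self.p
        self.weights = [w + self.learning_rate * err * x
                        for w, x in zip(self.weights, self.inputs)]
```

Models that keep predicting the right bit gain weight, so the mixer learns which of them to trust in the current part of the input.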

For both the Calgary corpus and enwik8, there is still a significant gap before cmix reaches the state of the art. PAQAR and decomp8 are both specialized versions of paq8 that use dictionary preprocessing specific to the dataset (i.e. they are not very good at general-purpose compression). My first official benchmark submission will probably be to the Large Text Compression Benchmark, where cmix would currently rank 4th out of 183 submissions. However, I still have a number of ideas that should make cmix significantly better, so I'll hold off on submitting until I reach first place!

Saturday, February 15, 2014

Nothing To Hide

There is a cool game in development called Nothing To Hide. It uses the visibility polygon library that I wrote. There is a review at Rock, Paper, Shotgun.
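A visibility polygon is the region of the plane that can be seen from a point, given a set of line-segment obstacles. The post does not describe how the library computes it, so as a hedged illustration here is a brute-force ray-casting version in Python. It is a swapped-in technique, not necessarily the library's algorithm, and the function names are hypothetical.

```python
import math

def ray_hit(origin, angle, seg):
    """Distance along the ray from origin at `angle` to segment seg, or None."""
    ox, oy = origin
    dx, dy = math.cos(angle), math.sin(angle)
    (x1, y1), (x2, y2) = seg
    ex, ey = x2 - x1, y2 - y1
    denom = dx * ey - dy * ex  # 2D cross product d x e
    if abs(denom) < 1e-12:
        return None  # ray and segment are parallel
    # Solve origin + t*d = p1 + s*e for t (along the ray) and s (along the segment).
    wx, wy = x1 - ox, y1 - oy
    t = (wx * ey - wy * ex) / denom
    s = (wx * dy - wy * dx) / denom
    return t if t >= 0 and 0 <= s <= 1 else None

def visibility_polygon(origin, segments, eps=1e-5):
    """Vertices of the region visible from origin, sorted by angle.

    Brute force: cast a ray at every segment endpoint and slightly to either
    side of it, keeping the nearest hit. Assumes the segments enclose the
    origin (for example, include the four walls of a bounding box)."""
    ox, oy = origin
    angles = []
    for seg in segments:
        for px, py in seg:
            a = math.atan2(py - oy, px - ox)
            angles.extend((a - eps, a, a + eps))
    polygon = []
    for a in sorted(angles):
        hits = [t for t in (ray_hit(origin, a, s) for s in segments) if t is not None]
        if hits:
            t = min(hits)
            polygon.append((ox + t * math.cos(a), oy + t * math.sin(a)))
    return polygon
```

The extra rays at `a - eps` and `a + eps` let the polygon slip past a corner and reach the wall behind it. This version costs O(n²) per query; an angular sweep over sorted endpoints can bring that down to O(n log n).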

Monday, January 06, 2014

Avegant Glyph

A few months ago I wrote a review of the Oculus Rift. There is another similar product coming out soon which I am even more excited about. The Avegant Glyph uses a revolutionary technology called virtual retinal display (VRD). My prediction is that 50 years from now VRD will be the primary way most people interact with computers.

The first version of the Glyph will be available on Kickstarter on January 22nd for $499. I am tempted to order one. I don't like the fact that headphones/batteries are embedded in the headset, making it much bulkier than it needs to be.

cmix

I spent most of my Christmas break working on a data compression project: a lossless compression program called "cmix", written in C++. It is aimed entirely at optimizing compression ratio (at the cost of high CPU/memory usage). I have the very ambitious goal of eventually surpassing the compression performance of PAQ8, which is currently state of the art on most compression benchmarks. My master's thesis was related to PAQ8. Realistically, it is unlikely that I will be able to surpass PAQ8, given the amount of work and expertise that has gone into its development.

As I develop the program, I have been tracking my progress on some standard compression benchmarks. You can see my progress at https://sites.google.com/site/cmixbenchmark/. This tracks two compression benchmarks: enwik8 (Wikipedia) and the Calgary corpus (a mixture of files). For reference, the tables show the compressed sizes produced by some other compression programs. The timeline shows how the performance of cmix changes as I add new features.

cmix currently compresses the Calgary corpus to 667,558 bytes. The record is 580,170 bytes, held by a variant of PAQ8 called PAQAR. There have been hundreds of versions of PAQ, but here are some major milestones in its version history:

| Version | Calgary corpus (bytes) | Date |
|---|---|---|
| P5 | 992,902 | 2000 |
| PAQ1 | 716,704 | 2002 |
| PAQ2 | 702,382 | May 2003 |
| PAQ3 | 696,616 | Sep 2003 |
| PAQ4 | 672,134 | Nov 2003 |
| PAQ5 | 661,811 | Dec 2003 |
| PAQ6 | 648,892 | Jan 2004 |
| PAQ7 | 611,684 | Dec 2005 |
| PAQ8L | 595,586 | Mar 2007 |

So cmix currently does slightly better than PAQ4. cmix has an architecture similar to PAQ's. It is a streaming algorithm: it compresses data by predicting the probability of each bit given the previous bits in the input. It combines the predictions of a large number of independent models into a single prediction for each bit (i.e. ensemble learning). The main developer of PAQ wrote an online book about data compression: Data Compression Explained. It is an excellent reference, and I have been following it closely in the development of cmix.
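The link between prediction and compression is direct: if a model assigns probability p to the bit that actually occurs, an arithmetic coder can encode that bit in about -log2 p bits. The Python sketch below sums this ideal cost for a single adaptive model whose context is the bits already seen in the current byte. It is a deliberately tiny stand-in for the many models cmix and PAQ combine: one order-0 model with a Krichevsky-Trofimov estimator, chosen for illustration. It estimates a compressed size rather than producing compressed output.

```python
import math

def ideal_code_length_bits(data: bytes) -> float:
    """Ideal cost in bits of coding `data` one bit at a time with an adaptive model."""
    counts = {}  # context -> [count of 0 bits, count of 1 bits]
    total = 0.0
    for byte in data:
        ctx = 1  # leading 1 marks how many bits of this byte are already known
        for i in range(7, -1, -1):  # most significant bit first
            bit = (byte >> i) & 1
            n0, n1 = counts.get(ctx, [0, 0])
            p1 = (n1 + 0.5) / (n0 + n1 + 1.0)  # Krichevsky-Trofimov estimate of P(1)
            p = p1 if bit else 1.0 - p1
            total -= math.log2(p)  # an arithmetic coder gets within a few bits of this sum
            counts.setdefault(ctx, [0, 0])[bit] += 1
            ctx = (ctx << 1) | bit
    return total
```

Predictable input such as a long run of one repeated byte costs only a few dozen bits in total, while the very first byte costs the full 8 bits because every context starts at p = 0.5.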

Wednesday, November 06, 2013

Duna Landing

Today I landed on Duna (fourth planet from the Sun):

The landing was miraculous. I completely ran out of fuel before I was even in orbit around Duna. My approach velocity was way too high to get into orbit, so I used my last bit of fuel to aim my trajectory at Duna's atmosphere. Incredibly, burning through the atmosphere slowed me down enough to get into orbit. The orbit took me around into a second pass through the atmosphere. On the second pass I deployed my parachutes. Unfortunately Duna's atmosphere isn't very thick, so most of my ship was destroyed by the surface impact.

Here is the ship launching from Kerbin:

If you look closely, you can see my main thruster is a nuclear thermal rocket.

My Mun landing happened at 13 hours of gameplay and the Duna landing was at 19 hours.

Tuesday, November 05, 2013

Landing on the Mun

Today I achieved the impossible: safely landing a kerbal on the Mun.

I landed on the dark side of the Mun, so it is a bit hard to see. Next time I'll bring some lights on the ship. Unfortunately the ship is completely out of fuel, so Bill will have to wait there until I send a rescue mission. Here is the ship preparing for launch from Kerbin:

Here is the support crew, orbiting the Mun:

Wednesday, October 30, 2013

Fluid Simulation

http://www.byronknoll.com/smoke.html

This is a simulation of the Navier-Stokes equations. The implementation is based on this paper:

Stam, Jos (2003), Real-Time Fluid Dynamics for Games

Unfortunately the simulation turned out to be very slow, so my demo uses a tiny grid.
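Stam's method stays stable at any time step because diffusion is solved implicitly (here with Gauss-Seidel relaxation) and advection traces each cell backwards through the velocity field (semi-Lagrangian advection). Below is a minimal Python port of the density part of that approach. It assumes a flattened grid with a one-cell border, as in the paper, omits the velocity projection step, and only handles the simple scalar boundary case. It is an illustration, not the code behind the demo.

```python
def ix(i, j, n):
    """Index into a flattened (n + 2) x (n + 2) grid with a one-cell border."""
    return i + (n + 2) * j

def set_bnd(n, x):
    """Scalar boundary condition (the paper's b = 0 case): borders copy neighbours."""
    for k in range(1, n + 1):
        x[ix(0, k, n)] = x[ix(1, k, n)]
        x[ix(n + 1, k, n)] = x[ix(n, k, n)]
        x[ix(k, 0, n)] = x[ix(k, 1, n)]
        x[ix(k, n + 1, n)] = x[ix(k, n, n)]
    # Corners take the average of their two border neighbours.
    x[ix(0, 0, n)] = 0.5 * (x[ix(1, 0, n)] + x[ix(0, 1, n)])
    x[ix(0, n + 1, n)] = 0.5 * (x[ix(1, n + 1, n)] + x[ix(0, n, n)])
    x[ix(n + 1, 0, n)] = 0.5 * (x[ix(n, 0, n)] + x[ix(n + 1, 1, n)])
    x[ix(n + 1, n + 1, n)] = 0.5 * (x[ix(n, n + 1, n)] + x[ix(n + 1, n, n)])

def diffuse(n, x, x0, diff, dt, iterations=20):
    """Implicit diffusion of x0 into x, solved with Gauss-Seidel relaxation."""
    a = dt * diff * n * n
    for _ in range(iterations):
        for j in range(1, n + 1):
            for i in range(1, n + 1):
                neighbours = (x[ix(i - 1, j, n)] + x[ix(i + 1, j, n)]
                              + x[ix(i, j - 1, n)] + x[ix(i, j + 1, n)])
                x[ix(i, j, n)] = (x0[ix(i, j, n)] + a * neighbours) / (1 + 4 * a)
        set_bnd(n, x)

def advect(n, d, d0, u, v, dt):
    """Semi-Lagrangian advection of d0 through velocity (u, v) into d."""
    dt0 = dt * n
    for j in range(1, n + 1):
        for i in range(1, n + 1):
            # Trace this cell's centre backwards in time, staying inside the grid.
            x = min(max(i - dt0 * u[ix(i, j, n)], 0.5), n + 0.5)
            y = min(max(j - dt0 * v[ix(i, j, n)], 0.5), n + 0.5)
            i0, j0 = int(x), int(y)
            s1, t1 = x - i0, y - j0
            s0, t0 = 1 - s1, 1 - t1
            # Bilinearly interpolate the old field at that point.
            d[ix(i, j, n)] = (s0 * (t0 * d0[ix(i0, j0, n)] + t1 * d0[ix(i0, j0 + 1, n)])
                              + s1 * (t0 * d0[ix(i0 + 1, j0, n)] + t1 * d0[ix(i0 + 1, j0 + 1, n)]))
    set_bnd(n, d)
```

In a full step, the density is diffused into a scratch array and then advected back. The velocity field is updated with the same two operations plus a projection step that keeps it divergence-free. Every step visits every cell several times, so cost grows with the square of the grid size.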

Saturday, October 26, 2013

Normal Mapping

I made an HTML5 shader using normal mapping: http://www.byronknoll.com/dragon.html.

I made this based on a demo by Jonas Wagner. I actually found some mistakes in the underlying math in Jonas Wagner's demo, which I fixed in my version.
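The core of a normal-mapping shader is per-pixel lighting: each texel of the normal map encodes a surface normal, and the diffuse brightness is the dot product of that normal with the light direction. Here is a minimal Python sketch of that calculation. It assumes the common encoding that maps each 0..255 channel to -1..1 and a simple Lambertian lighting model; the post does not say which lighting model the demo uses or which mistakes were fixed.

```python
import math

def decode_normal(r, g, b):
    """Map an 8-bit normal-map texel to a unit vector (each channel 0..255 -> -1..1)."""
    n = [c / 255.0 * 2.0 - 1.0 for c in (r, g, b)]
    length = math.sqrt(sum(c * c for c in n))
    return [c / length for c in n]

def shade(albedo, texel, light_dir):
    """Lambertian diffuse term for one pixel: albedo * max(0, N . L)."""
    n = decode_normal(*texel)
    length = math.sqrt(sum(c * c for c in light_dir))
    l = [c / length for c in light_dir]
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, l)))
    return tuple(channel * n_dot_l for channel in albedo)
```

The texel (128, 128, 255) encodes a normal pointing almost straight out of the surface, so it is fully lit by a light along +z and black when lit from behind. In a real shader, the same arithmetic runs per fragment on the GPU.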

I got the dragon model here and rendered it using Blender. Here are the texture and normal map:

I now have a gallery of the HTML5 demos I have made: http://www.byronknoll.com/html5.html

Thursday, October 17, 2013

Redesign

I just updated my homepage with a new CSS layout. Let me know if you see any issues or have suggested improvements.

Wednesday, October 09, 2013

Deformable Textures in HTML5 Canvas

Inspired by this HTML5 demo, I decided to try to add a texture to the HTML5 blob I made earlier. Here is my attempt: http://www.byronknoll.com/earth.html

Initially I was stuck because manipulating and rendering individual pixels on an HTML5 canvas is too slow. To get a decent framerate, you need to either use vector graphics or render chunks of a raster image using drawImage(). My breakthrough came when I read this Stack Overflow thread. Using the transform() method, you can apply an affine transformation (a linear transformation plus a translation) to regions of a raster image. I split up the image of Earth into pizza slices and mapped each slice onto the blob with the appropriate transformation (to match up with the boundary vertices of the blob). It seems to work well and even has a decent framerate on my phone.
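Each pizza slice can be treated as a triangle (the blob's center plus two neighbouring boundary vertices). Three point pairs are exactly enough to pin down the six numbers that canvas's transform(a, b, c, d, e, f) takes, where a point (x, y) maps to (a*x + c*y + e, b*x + d*y + f). The Python sketch below solves for them. The function names are hypothetical, and this is a sketch of the idea, not the demo's actual code. In the browser, the result would be passed to transform() after clipping to the destination triangle and before calling drawImage().

```python
def triangle_transform(src, dst):
    """Return (a, b, c, d, e, f) mapping triangle src onto triangle dst,
    in the argument order used by canvas transform()."""
    (sx0, sy0), (sx1, sy1), (sx2, sy2) = src
    (dx0, dy0), (dx1, dy1), (dx2, dy2) = dst
    # Edge vectors of both triangles, relative to their first vertex.
    ux1, uy1, ux2, uy2 = sx1 - sx0, sy1 - sy0, sx2 - sx0, sy2 - sy0
    vx1, vy1, vx2, vy2 = dx1 - dx0, dy1 - dy0, dx2 - dx0, dy2 - dy0
    det = ux1 * uy2 - ux2 * uy1
    if det == 0:
        raise ValueError("source triangle is degenerate")
    # Linear part M = V * U^-1, where U and V hold the edge vectors as columns.
    a = (vx1 * uy2 - vx2 * uy1) / det
    c = (vx2 * ux1 - vx1 * ux2) / det
    b = (vy1 * uy2 - vy2 * uy1) / det
    d = (vy2 * ux1 - vy1 * ux2) / det
    # Translation that lines up the first vertices.
    e = dx0 - (a * sx0 + c * sy0)
    f = dy0 - (b * sx0 + d * sy0)
    return a, b, c, d, e, f

def apply_transform(t, point):
    """Apply (a, b, c, d, e, f) to a point the way canvas does."""
    a, b, c, d, e, f = t
    x, y = point
    return a * x + c * y + e, b * x + d * y + f
```

Because an affine map sends straight lines to straight lines, each slice deforms as a flat triangle. Using more, thinner slices makes the texture follow the blob's curved outline more closely.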

Thursday, September 12, 2013

Oculus Rift Review

I have been playing with the Oculus Rift developer kit (thanks to my roommate who ordered it). The developer kit costs $300.

It was very easy to set up: all you have to do is plug in the cables and it works. The first problem I encountered was with the size of the headband. The *maximum* length setting is barely big enough for my head. It will probably be uncomfortable for anyone with a bigger head than me.

I started out by trying some demos from https://share.oculusvr.com/. The initial experience is fantastic: more immersive than any gaming experience I have tried before. The head tracking and eye focus feel very natural, and it has a great field of view. The display quality is a bit disappointing, however. Due to the low resolution and the magnification from the lens, you can see individual pixels and the black borders between them. The display also becomes quite blurry towards the edges. Text is basically unreadable unless it is large and near the center of the display. The consumer edition of the Oculus Rift will have a higher-resolution display, so hopefully that improves things. The color quality and brightness of the display seem nice, though.

The head tracking is not perfect. If you concentrate, you can see the lag between your head movement and the display updating. However, it is good enough that you don't notice it unless you are specifically looking for it.

The 3D effect works very well. Since each eye gets its own display, parallax works perfectly and your brain correctly interprets depth information. Much better than the 3D effect at a theater.

Wearing the headset is comfortable, although I started feeling a bit dizzy/nauseated after using it for ~30 minutes.

Games have to specifically add support for the Oculus Rift in order to be compatible. There are currently very few games with support (although that will probably change once the commercial product gets released). I bought Surgeon Simulator 2013. This seems to have pretty good support, although it has some text which is hard to read.

VR goggles and head-mounted displays (HMDs) are definitely going to start becoming popular within the next couple of years. Sony is rumored to be developing VR goggles for the PS4. Sony has already released an HMD intended for watching movies and 3D content (the HMZ-T2). The cinemizer OLED does the same thing. Google Glass will be released soon (along with several direct competitors). I am more excited about these types of displays than I am about the Oculus Rift. I want a high-quality HMD that can completely replace my monitor. The HMZ-T2 and cinemizer are not good enough for this yet.

I did some research into building my own HMD. Building a clone of the Oculus Rift is apparently feasible. I don't care about having head tracking or a high field of view. Instead, I would prefer higher display quality and less distortion around the edges (by using a lower-magnification lens). This would make the display more usable for reading text and watching movies (which the Oculus Rift is terrible at). I tried removing the lenses from the Oculus Rift and looking directly at the display. Unfortunately the image is too close to focus on; apparently the lens reduces your minimum focus distance. I think swapping in some lower-magnification lenses could improve things.
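The focusing problem can be worked through with the thin-lens equation 1/f = 1/d_o + 1/d_i. When the display sits inside the lens's focal length, the lens forms a magnified virtual image farther from the eye than the display itself. That is what makes a display a few centimetres away comfortable to focus on. The numbers below are hypothetical, not Oculus Rift specifications.

```python
def image_distance(object_distance, focal_length):
    """Solve the thin-lens equation 1/f = 1/d_o + 1/d_i for d_i.

    A negative result is a virtual image on the same side of the lens as the
    object (the display), at that distance from the lens."""
    if object_distance == focal_length:
        return float("inf")  # rays leave parallel: image at infinity
    return object_distance * focal_length / (object_distance - focal_length)

# Hypothetical: display 4 cm from a lens with a 5 cm focal length.
print(image_distance(4.0, 5.0))   # -20.0: a virtual image 20 cm from the lens
# A weaker (longer focal length) lens with the display at the same distance.
print(image_distance(4.0, 10.0))  # about -6.67: the virtual image moves much closer
```

Under these assumptions, a weaker lens alone brings the virtual image closer. To put the image back at 20 cm with f = 10 cm, the display would also have to move back to about 6.7 cm from the lens.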

Tuesday, September 10, 2013

Saturday, August 31, 2013

Compass Belt

Over the last week I have been assembling a compass belt: a belt lined with ten motors controlled by an Arduino. The motor closest to north vibrates, so the person wearing the belt always knows which direction is north. I am not the first person to build a compass belt. Here is a list of all the parts I used:

- 10 motors $49.50
- Compass $34.95
- Arduino Uno $29.95
- Soldering iron $9.95
- Belt $8.99
- Soldering iron stand $5.95
- Wire $5.00
- Multiplexer $4.95
- Wire strippers $4.95
- USB cable $3.95
- Breadboard $3.95
- Battery holder $2.95
- Flush cutter $2.95
- Proto boards $2.50
- Solder wick $2.49
- Solder $1.95
- Headers $1.50
- Transistor $0.75
- Capacitor $0.25
- 1k resistor $0.25
- 33 ohm resistor $0.25
- Diode $0.15

Here is a picture of the inside:

The belt seems to work well: it is quiet, accurate, and updates instantly when I turn around. I haven't actually found it useful yet; it seems pointless to wear it in places I am already familiar with (and it looks silly). Even if I don't end up using it, it was fun to build and I learned a lot about electronics.
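The selection rule from the first paragraph (vibrate the motor closest to north) comes down to a little modular arithmetic. The post does not include the Arduino firmware, so this is a hedged Python sketch of just that rule. The motor numbering and heading conventions are assumptions, and reading the compass and driving the motors through the multiplexer are left out.

```python
import math

NUM_MOTORS = 10

def motor_for_north(heading_deg, num_motors=NUM_MOTORS):
    """Return the index of the motor that points closest to north.

    Assumptions (not from the original build): motor 0 sits at the front of
    the belt, indices increase clockwise when seen from above, and heading_deg
    is the compass heading the wearer faces, in degrees clockwise from north."""
    # Bearing of north, measured clockwise from the wearer's front.
    relative = (-heading_deg) % 360.0
    spacing = 360.0 / num_motors  # 36 degrees apart for ten motors
    # Round to the nearest motor, wrapping 360 degrees back to motor 0.
    return math.floor(relative / spacing + 0.5) % num_motors
```

Facing east (heading 90), north is on the wearer's left, 270 degrees clockwise from the front, which falls on motor 8 under this numbering.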

Tuesday, July 30, 2013

Saturday, June 08, 2013

Time Series Forecasting

Today I released an open source project for time series prediction/forecasting: https://code.google.com/p/predcast/

Wednesday, May 22, 2013

Unlabeled Object Recognition in Google+

Google+ released an amazing feature that uses object recognition to tag photos. Here is a Reddit thread discussing it. Generalized object recognition is an incredibly difficult problem, and this is the first product I have seen that supports it. This isn't a gimmick: it can recognize objects in pictures without *any* corresponding text information (such as the filename, title, comments, etc.). Here are some examples from my photos (none of these photos contain any corresponding text data to help):

At first I thought this last one was a misclassification, until I zoomed in further and saw a tiny plane:

Of course, there are also many misclassifications since this is such a hard problem:

This squirrel came up for [cat]:

This train came up for [car]:

This goat came up for [dog]:

These fireworks came up for [flower]:

This millipede came up for [snake]:
