Monday, November 21, 2011

InnoCentive

I found a cool website called InnoCentive. They currently host 116 active competitions - some of them with million dollar prizes. Most of the contests seem to be about solving open-ended problems in science/engineering. I have made a submission to the "Strategy to Assimilate Unstructured Information" contest (which ends in four days). There is a similar website that hosts competitions for machine learning problems called Kaggle.

Facebook Removes RSS Importing

When I logged into Facebook today I was greeted with this message:

That's right, Facebook is removing the ability to import RSS feeds. I am not very active on Facebook, so people commenting on my automatically imported blog posts is one of the few ways I still use the service. Now that this functionality is removed, my usage of Facebook will drop significantly. I have the suspicion they are removing it for a bad reason. I have noticed that the amount of time it takes for RSS feed items to be imported to Facebook can sometimes take several days. Why would it be so slow? Google Reader updates my feeds in a matter of minutes. I think the only reason Facebook would be so slow at importing feeds is if it is an expensive process and they don't want to spend the resources to update more often. The feature is obviously good for users, so the only reason Facebook would have to remove it is to reduce costs.

That's right, Facebook is removing the ability to import RSS feeds. I am not very active on Facebook, so people commenting on my automatically imported blog posts is one of the few ways I still use the service. Now that this functionality is removed, my usage of Facebook will drop significantly. I have the suspicion they are removing it for a bad reason. I have noticed that the amount of time it takes for RSS feed items to be imported to Facebook can sometimes take several days. Why would it be so slow? Google Reader updates my feeds in a matter of minutes. I think the only reason Facebook would be so slow at importing feeds is if it is an expensive process and they don't want to spend the resources to update more often. The feature is obviously good for users, so the only reason Facebook would have to remove it is to reduce costs.

Sunday, November 20, 2011

e-sports

I spent the day watching the MLG Starcraft 2 championship. This is by far the most entertaining sport I have watched. I bet it would be fun to watch even for people who have never heard of Starcraft. It is definitely attracting a growing audience in the US and should start showing up on TV networks soon. I started cheering for the South Korean player Leenock while he was in the losers bracket, several rounds before the finals. I was thrilled when he continued winning round after round, making his way out of the losers bracket to eventually win the competition and a $50,000 prize. Incredibly he is only 16 years old! Before this contest almost nobody had heard of Leenock, so it was exciting to watch him defeat world-famous sc2 players one after another.

Leenock's rise to fame reminds me of another young e-sport competitor: tourist. tourist is only 17 years old but has proven himself to be one of the world's best competitive programmers. I find it shocking that tourist/Leenock can become the best at their respective fields at such a young age. It shows how genetics and innate intelligence/talent plays a crucial role in these sports, since their age obviously limits the number of years they have spent practising. I think since competitive programming is a pretty good measurement of human intelligence, tourist may also be one of the smartest people in the world. It will be interesting to keep track of what he eventually accomplishes in his career.

Leenock's rise to fame reminds me of another young e-sport competitor: tourist. tourist is only 17 years old but has proven himself to be one of the world's best competitive programmers. I find it shocking that tourist/Leenock can become the best at their respective fields at such a young age. It shows how genetics and innate intelligence/talent plays a crucial role in these sports, since their age obviously limits the number of years they have spent practising. I think since competitive programming is a pretty good measurement of human intelligence, tourist may also be one of the smartest people in the world. It will be interesting to keep track of what he eventually accomplishes in his career.

Monday, November 07, 2011

rpscontest.com is down!

A while ago I created the site rpscontest.com - a rock-paper-scissors programming competition hosted by Google App Engine. The site is currently down due to exceeding its quota. This is because today App Engine introduced a new pricing model. With the old pricing model my quota usage was $0 per day. With the new model it is about $13 per day, or about $4,750 per year. Seriously? That is a ridiculous increase in price. Of course this basically forces me to shut down the site or redesign how it works. For now I have disabled all automatic ranked matches which should bring it back into the free quota (at the same time making the site completely useless because the rankings will no longer update). I can only assume that the price increase has a larger impact on me than typical users (possibly due to the type of resources I use to run matches). The only reason I make this assumption is because if everyone is hit by the price increase as badly as me, nobody would continue using App Engine. I am not happy.

Monday, October 31, 2011

Google Reader

Today Google Reader was updated with a new UI and Google+ integration. Although the reaction on Reddit seems to be quite negative, I like the new UI. One complaint people have is that there is much more whitespace and less space for content than the older version. While this is true, it doesn't bother me because of the way I use Google Reader. I focus on one feed item at a time, using a hotkey to cycle through items. Feed items usually are a short snippet of text so don't need much space anyway.

In case anyone doesn't know what RSS feeds are or what Google Reader does, I will give a short explanation here. Many websites update a .RSS file whenever they post new content. A feed reader simply listens to a set of RSS feeds and tells you when new content is posted. Instead of manually visiting a long list of your favorite websites to find content, you can find it all organized in one place with a feed reader. RSS feeds can have other uses as well: my blog posts automatically get imported into Facebook via RSS.

I get the majority of my news/entertainment through RSS feeds, so I encourage everyone to try it out. My favorite feeds come from Reddit (and various subreddits). Webcomics are perfect for RSS feeds - some of my favorites include: xkcd, The Perry Bible Fellowship, Nedroid, Hyperbole and a Half, The Oatmeal, Penny Arcade, Buttersafe, and Abstruse Goose.

Google Reader has some interesting statistics in the "Trends" view. The graph above shows the time of day that I usually read items. Since October 7, 2005 I have read a total of 102,284 items. I can also view the number of people in Google Reader subscribed to various feeds - my blog currently has 14. Here is my blog's feed.

In case anyone doesn't know what RSS feeds are or what Google Reader does, I will give a short explanation here. Many websites update a .RSS file whenever they post new content. A feed reader simply listens to a set of RSS feeds and tells you when new content is posted. Instead of manually visiting a long list of your favorite websites to find content, you can find it all organized in one place with a feed reader. RSS feeds can have other uses as well: my blog posts automatically get imported into Facebook via RSS.

I get the majority of my news/entertainment through RSS feeds, so I encourage everyone to try it out. My favorite feeds come from Reddit (and various subreddits). Webcomics are perfect for RSS feeds - some of my favorites include: xkcd, The Perry Bible Fellowship, Nedroid, Hyperbole and a Half, The Oatmeal, Penny Arcade, Buttersafe, and Abstruse Goose.

Google Reader has some interesting statistics in the "Trends" view. The graph above shows the time of day that I usually read items. Since October 7, 2005 I have read a total of 102,284 items. I can also view the number of people in Google Reader subscribed to various feeds - my blog currently has 14. Here is my blog's feed.

Sunday, October 30, 2011

DARPA Shredder Challenge

This weekend I started working on the DARPA Shredder Challenge. This is a contest to reconstruct shredded documents. There are a total of five puzzles and a $50,000 prize. So far I have solved one puzzle using a combination of algorithms and manual assembly. The first puzzle was pretty tough, so I am worried that I won't be able to solve any of the remaining four.

Saturday, October 29, 2011

Dark Souls Defeated

I managed to beat dark souls in a total of 57 hours of play time. I made heavy use of the multiplayer components of the game - summoning other players to help me defeat powerful enemies. I suspect that without taking advantage of the online functionality this game would be significantly more difficult. Overall I enjoyed Dark Souls more than Demon's Souls. Throughout the game I was stunned by the creativity and beauty of the level design. The design team must be incredibly talented since the world they created is far more immersive than any other game I have played.

Friday, October 07, 2011

Dark Souls

Demon's Souls was one of the greatest games I have played. The game was so difficult that I considered giving up several times - but I eventually beat it. On Tuesday a sequel to Demon's Souls was released called Dark Souls. I am only about 5 hours into the game but so far it is incredible - maybe even better than the original. I have been warned by online reviews that Dark Souls is even harder than Demon's Souls, so I am hoping that I won't get stuck and miss out on experiencing the full game. It is interesting that such a frustrating/agonizing game is enjoyable at the same time - the joy of beating a monster makes up for the pain of spending hours trying and failing to beat the monster in previous attempts.

Tuesday, September 06, 2011

Fast String Matching

String matching is an important problem in several fields of computer science such as bioinformatics, data compression, and search engines. I find it amazing that search engines can find exact string matches in massive datasets in essentially constant time. Having the ability to do this would be very useful for when I create data compression algorithms!

Last night I downloaded the entire English Wikipedia, which turned out to be a 31.6GiB XML file. I spent the day trying to solve the following problem: how to quickly find strings in this massive file. The hardest constraint in solving this problem is the fact that my computer only has about 3GiB of memory. Somehow I needed to preprocess the 32GiB file into 3GiB of memory and then *only* use the 3GiB of memory to find the location of arbitrary strings in constant time. Solving this problem exactly is impossible, so the goal of the project is to maximize the likelihood that the match I find is correct.

I tried doing some research online and was surprised that I couldn't find many helpful resources. This is surprising since this seems like such an important problem. I will describe the algorithm I ended up implementing:

I stored all of the data in two arrays. The first array stored positions in the XML file, type = long, dimension = 20000000x4. The second array stored counts, type = int, dimension = 20000000. The first step was to go through the 32GiB XML file and extract every substring of length 25. These substrings are keys that I used to store in the arrays. I computed a hash for each key to use as an array index. The second array was used to keep track of the number of keys put in each array position, so it is simply incremented for each key. The first array is used to store the position of up to four substrings. I stored the position of a particular key with a probability that depends on the number of colliding keys (the count stored in the second array). More collisions = less likely to store the position.

OK, so the algorithm so far preprocessed the 32GiB file into about 700MiB of memory. Now comes the fun part of using the data to do string matching. Given a string to search for, I first extract every substring of length 25. I hash each substring and collect the locations that are stored at those positions in the first array. Only a small fraction of these matches are likely to actually correspond to the string we are searching for. The essential piece of information that helps find the true match is the fact that the offset of the substrings in our search query should match the corresponding offset of matches returned from the data. It is possible to find the best match in O(n) time (where n is the length of the search query).

To sum it up: I preprocess the data in O(m) time (where m is the length of the data). I can then do string matching in O(n) time (where n is the length of the search query).

So how well did this work on the dataset? It took something like 1 hour to preprocess the XML file (basically the amount of time required to read the entire file from disk). The search queries run remarkably fast - no noticeable delay even for queries that are hundreds of thousands of characters long. The longer the search query, the more likely the algorithm is to return the correct match location. For queries of length 50, it seems to match correctly about half of the time. For queries over length 100, it is almost always correct. Success!

Interesting fact: my algorithm is somewhat similar to how Shazam works. Shazam is a program that can look up the name of a song given a short recorded sample of it. The longer the recording, the more likely the correct match is returned.

Last night I downloaded the entire English Wikipedia, which turned out to be a 31.6GiB XML file. I spent the day trying to solve the following problem: how to quickly find strings in this massive file. The hardest constraint in solving this problem is the fact that my computer only has about 3GiB of memory. Somehow I needed to preprocess the 32GiB file into 3GiB of memory and then *only* use the 3GiB of memory to find the location of arbitrary strings in constant time. Solving this problem exactly is impossible, so the goal of the project is to maximize the likelihood that the match I find is correct.

I tried doing some research online and was surprised that I couldn't find many helpful resources. This is surprising since this seems like such an important problem. I will describe the algorithm I ended up implementing:

I stored all of the data in two arrays. The first array stored positions in the XML file, type = long, dimension = 20000000x4. The second array stored counts, type = int, dimension = 20000000. The first step was to go through the 32GiB XML file and extract every substring of length 25. These substrings are keys that I used to store in the arrays. I computed a hash for each key to use as an array index. The second array was used to keep track of the number of keys put in each array position, so it is simply incremented for each key. The first array is used to store the position of up to four substrings. I stored the position of a particular key with a probability that depends on the number of colliding keys (the count stored in the second array). More collisions = less likely to store the position.

OK, so the algorithm so far preprocessed the 32GiB file into about 700MiB of memory. Now comes the fun part of using the data to do string matching. Given a string to search for, I first extract every substring of length 25. I hash each substring and collect the locations that are stored at those positions in the first array. Only a small fraction of these matches are likely to actually correspond to the string we are searching for. The essential piece of information that helps find the true match is the fact that the offset of the substrings in our search query should match the corresponding offset of matches returned from the data. It is possible to find the best match in O(n) time (where n is the length of the search query).

To sum it up: I preprocess the data in O(m) time (where m is the length of the data). I can then do string matching in O(n) time (where n is the length of the search query).

So how well did this work on the dataset? It took something like 1 hour to preprocess the XML file (basically the amount of time required to read the entire file from disk). The search queries run remarkably fast - no noticeable delay even for queries that are hundreds of thousands of characters long. The longer the search query, the more likely the algorithm is to return the correct match location. For queries of length 50, it seems to match correctly about half of the time. For queries over length 100, it is almost always correct. Success!

Interesting fact: my algorithm is somewhat similar to how Shazam works. Shazam is a program that can look up the name of a song given a short recorded sample of it. The longer the recording, the more likely the correct match is returned.

Saturday, September 03, 2011

Gold Promotion

I recently got promoted to gold league:

Here is the army graph for a match I am proud of:

The enemy completely destroyed my army and my main base. I think they started taking it easy after my main base went down - I made a huge comeback and eventually won the game :)

Saturday, July 09, 2011

RoboCup

I have been in Istanbul, Turkey for the last week at the RoboCup robotics competition. We are competing in the small size league. Today we finished our last official match. Although the final rankings have not been released yet, we think our team placed 9th (out of 20). We ranked well in the technical challenges: 3rd place in the navigation challenge and 3rd place in the mixed team challenge.

Master's Thesis

I finished my master's thesis! The final copy is posted here. Now that my thesis is done I can start work at Google. My start date is July 18.

Friday, July 08, 2011

Crater Detection

The contest has finished and my final ranking was 6th place. One rank away from a prize! The approach I used was using template matching and the normalized cross-correlation distance metric.

Tuesday, June 28, 2011

Crater Detection

NASA is hosting a TopCoder marathon match to detect craters in satellite images. The contest lasts about two weeks and there are $10,000 in prizes (for the top five competitors). The task is to return a list of crater positions and sizes for a set of satellite images. There is a little over a day remaining in the contest and I am currently ranked third. If I end up doing well in the final rankings I will make a blog post about the technique I used. Here are a few example images in the training set:

Tuesday, June 21, 2011

Bitcoin

I recently started bitcoin mining using my GeForce GTX 260. Some of my friends have invested thousands of dollars into bitcoin mining hardware and have already earned back more than they invested. Bitcoin mining seems to be the latest fad among CS students. Unfortunately my mining rate is pitifully slow, so I gave up after a few days. I earned 0.5 bitcoins in slush's pool. I held an auction for my 0.5 BTC on IRC and got payed $5 CAD for it.

Saturday, May 28, 2011

Saturday, May 21, 2011

Website Exploit

Within a few hours of launching my Rock Paper Scissors website somebody found an exploit. The exploit caused all users to be forwarded to another site. I have now fixed and prevented this particular exploit. An exciting website debut!

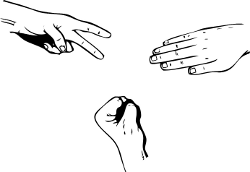

Rock Paper Scissors

Over the last two days I implemented a new website: Rock Paper Scissors Programming Contest. It is amazing how fast a relatively complex website can be created using Google App Engine. It has probably been one of the funnest programming projects I have worked on!

I think the website could potentially lead to some innovative research. All of the submissions are open-source, so if the website becomes popular I will be very interested to see how the best AIs work. It should be possible to directly convert any RPS algorithm into a compression algorithm - there should even be a correlation between RPS performance and compression performance.

I encourage anyone who is reading this to try submitting an entry. Programming a RPS AI should be pretty fun. Python is also very easy to learn, so even people who have no programming experience should give it a try!

Monday, May 16, 2011

Promote Me!

In Starcraft 2 I am currently rank 2 of bronze league. However, from what I understand the rank within a league is not very meaningful - the important number is called MMR. MMR determines league promotions and demotions. Blizzard keeps the MMR statistic hidden from players. I actually think this is a poor design decision since the benefits of releasing MMR seem to far outweigh the benefits of hiding it.

I have been practicing and optimizing a single strategy which seems to be doing pretty well. I won 17 of my last 18 matches - against bronze, silver, and gold players. The fact that I am winning against gold players should indicate that I need to be promoted from the bronze league. However, Blizzard's algorithm seems to think otherwise and wants me to stay in bronze :(

I have been practicing and optimizing a single strategy which seems to be doing pretty well. I won 17 of my last 18 matches - against bronze, silver, and gold players. The fact that I am winning against gold players should indicate that I need to be promoted from the bronze league. However, Blizzard's algorithm seems to think otherwise and wants me to stay in bronze :(

Tuesday, May 10, 2011

Starcraft II

Last week I bought Starcraft II. The game has already been out for a year, so I am late to the party. I also never played the original Starcraft (which has been out for 13 years), so you can imagine that I am terrible at the game.

I have spent a lot of time over the last week studying and practicing. I found the beginner guide at /r/starcraft to be quite helpful. I have also been watching Husky and day[9] videos. This is my favorite match so far. I find watching Starcraft matches quite entertaining, so I anticipate that I will continue watching matches even if I stop playing the game.

I play as Terran (username: omninox). I have started focusing on a single build order that seems to be doing quite well. I have been slowly rising in the bronze league - hopefully I will be promoted to silver soon. Most of my friends are way better than me, so I have been doing 1v1s with strangers. One player I know (a friend of a friend) is ranked at the top of the master league!

I made it to the last level of the campaign on hard difficulty. After a number of retries on the last level, I gave up and beat it on normal. I have also started playing two custom Starcraft games: starjeweled and desert strike. I have been using starjeweled as a way to relax between 1v1 rounds - I find 1v1 to be quite mentally exhausting. Desert strike is a great way to practice unit counter strategies. If you play Starcraft, send me a friend request and maybe we can play sometime.

I have spent a lot of time over the last week studying and practicing. I found the beginner guide at /r/starcraft to be quite helpful. I have also been watching Husky and day[9] videos. This is my favorite match so far. I find watching Starcraft matches quite entertaining, so I anticipate that I will continue watching matches even if I stop playing the game.

I play as Terran (username: omninox). I have started focusing on a single build order that seems to be doing quite well. I have been slowly rising in the bronze league - hopefully I will be promoted to silver soon. Most of my friends are way better than me, so I have been doing 1v1s with strangers. One player I know (a friend of a friend) is ranked at the top of the master league!

I made it to the last level of the campaign on hard difficulty. After a number of retries on the last level, I gave up and beat it on normal. I have also started playing two custom Starcraft games: starjeweled and desert strike. I have been using starjeweled as a way to relax between 1v1 rounds - I find 1v1 to be quite mentally exhausting. Desert strike is a great way to practice unit counter strategies. If you play Starcraft, send me a friend request and maybe we can play sometime.

Thursday, April 28, 2011

Image Compression Results

Here are some more results comparing JPEG at the maximum compression level to my image compression algorithm:

Original image:

JPEG (15.7KiB):

My algorithm (3.2KiB):

Original image:

JPEG (6.3KiB):

My algorithm (2.0KiB):

Original image:

JPEG (6.2KiB):

My algorithm (3.9KiB):

Original image:

JPEG (11.2KiB):

My algorithm (2.8KiB):

Original image:

JPEG (7.1KiB):

My algorithm (3.0KiB):

Original image:

JPEG (5.9KiB):

My algorithm (1.5KiB):

Original image:

JPEG (16.4KiB):

My algorithm (4.0KiB):

All of the above images are around 1MiB when uncompressed. In every case my algorithm resulted in a smaller file size and a (arguably) better looking image.

Original image:

JPEG (15.7KiB):

My algorithm (3.2KiB):

Original image:

JPEG (6.3KiB):

My algorithm (2.0KiB):

Original image:

JPEG (6.2KiB):

My algorithm (3.9KiB):

Original image:

JPEG (11.2KiB):

My algorithm (2.8KiB):

Original image:

JPEG (7.1KiB):

My algorithm (3.0KiB):

Original image:

JPEG (5.9KiB):

My algorithm (1.5KiB):

Original image:

JPEG (16.4KiB):

My algorithm (4.0KiB):

All of the above images are around 1MiB when uncompressed. In every case my algorithm resulted in a smaller file size and a (arguably) better looking image.

Wednesday, April 27, 2011

Lossy Image Compression

Last night I wrote a lossy image compression algorithm. It is based on an idea from this paper. First I trained a set of 256 6x6 color filters on the CIFAR-10 image dataset. To train the filters, I used the k-means algorithm on 400,000 randomly selected image patches. Here are the resulting filters:

I then used the filters to compress the following image:

I then used the filters to compress the following image:

Using the maximum compression level, JPEG compresses the image to 7.1KiB:

Using the maximum compression level, JPEG compresses the image to 7.1KiB:

My compression algorithm compresses the image to 5.6KiB:

My compression algorithm compresses the image to 5.6KiB:

Try clicking on the above images to see the high resolution versions. My compressed version of the image is smaller and looks better than JPEG.

Try clicking on the above images to see the high resolution versions. My compressed version of the image is smaller and looks better than JPEG.

I did the compression by doing a raster scan of the image and for each image patch I select the best filter. I then losslessly compress the filter selections using paq8l. The obvious improvement to this algorithm would be to use a combination of several filters for each image patch instead of selecting the best filter. Using several filters would take more space to encode but would result in a much better image approximation. Another idea I plan to try is to use this same algorithm for lossy video compression.

I then used the filters to compress the following image:

I then used the filters to compress the following image: Using the maximum compression level, JPEG compresses the image to 7.1KiB:

Using the maximum compression level, JPEG compresses the image to 7.1KiB: My compression algorithm compresses the image to 5.6KiB:

My compression algorithm compresses the image to 5.6KiB: Try clicking on the above images to see the high resolution versions. My compressed version of the image is smaller and looks better than JPEG.

Try clicking on the above images to see the high resolution versions. My compressed version of the image is smaller and looks better than JPEG.I did the compression by doing a raster scan of the image and for each image patch I select the best filter. I then losslessly compress the filter selections using paq8l. The obvious improvement to this algorithm would be to use a combination of several filters for each image patch instead of selecting the best filter. Using several filters would take more space to encode but would result in a much better image approximation. Another idea I plan to try is to use this same algorithm for lossy video compression.

Tuesday, April 26, 2011

The Sleeping Mind

I occasionally wake up from a dream and immediately realize how flawed my logic was. Although I rarely remember any of my dreams, I have definitely woken up and thought to myself "haha, my sleeping mind must be really stupid to have come up with that conclusion." However, I have encountered a few counterexamples which indicate that my sleeping mind can be productive.

When I am falling asleep I usually spend my time thinking about some challenging problem. My theory is that if I fall asleep while thinking about a problem, my sleeping mind might churn away during the night and make progress on the problem. Although this usually isn't the case, there have been a few instances when I wake up and I have the answer.

A few years ago I was stuck on a programming problem on a cpsc313 assignment. It was late at night, I wasn't making any progress, so I went to sleep. I woke up in the middle of the night and immediately knew how to solve the question. I was so excited that I spent a few minutes implementing the solution, verified that it worked, and then went back to sleep.

Last night I went to sleep trying to think of an idea for a project to implement on Google App Engine. This morning I was surprised to find that I had a complete project idea in mind (including the algorithms needed to implement it!). I don't particularly like the idea and I don't think I will implement it, but I was shocked to find that I could come up with an original idea with non-trivial algorithmic details while I was asleep.

The idea was to develop a website for people looking for recommended places to travel. The website would first ask a series of 5-10 binary questions. The questions would provide a brief description of two travel destinations. The user would then click on the destination they would prefer travelling to. After they complete the questions a ranked list of travel recommendations would be given to the user (the list would contain many more destinations than were asked in the questions). Using some basic machine learning, the travel recommendations would become more accurate as more people use the website. The answers to the questions serve two purposes: 1) to assign the user to a cluster of like-minded individuals in order to generate a ranked destination list and 2) to rank travel destinations for all users in that cluster. Although I don't particularly like this project idea, I do like the algorithm it uses. I can imagine that this same algorithm could be used for other project ideas (although I haven't thought of any good ones yet).

When I am falling asleep I usually spend my time thinking about some challenging problem. My theory is that if I fall asleep while thinking about a problem, my sleeping mind might churn away during the night and make progress on the problem. Although this usually isn't the case, there have been a few instances when I wake up and I have the answer.

A few years ago I was stuck on a programming problem on a cpsc313 assignment. It was late at night, I wasn't making any progress, so I went to sleep. I woke up in the middle of the night and immediately knew how to solve the question. I was so excited that I spent a few minutes implementing the solution, verified that it worked, and then went back to sleep.

Last night I went to sleep trying to think of an idea for a project to implement on Google App Engine. This morning I was surprised to find that I had a complete project idea in mind (including the algorithms needed to implement it!). I don't particularly like the idea and I don't think I will implement it, but I was shocked to find that I could come up with an original idea with non-trivial algorithmic details while I was asleep.

The idea was to develop a website for people looking for recommended places to travel. The website would first ask a series of 5-10 binary questions. The questions would provide a brief description of two travel destinations. The user would then click on the destination they would prefer travelling to. After they complete the questions a ranked list of travel recommendations would be given to the user (the list would contain many more destinations than were asked in the questions). Using some basic machine learning, the travel recommendations would become more accurate as more people use the website. The answers to the questions serve two purposes: 1) to assign the user to a cluster of like-minded individuals in order to generate a ranked destination list and 2) to rank travel destinations for all users in that cluster. Although I don't particularly like this project idea, I do like the algorithm it uses. I can imagine that this same algorithm could be used for other project ideas (although I haven't thought of any good ones yet).

Sunday, April 10, 2011

Sunday, March 27, 2011

RoboCup Iran Open

Next week I was planning to travel to Iran with the UBC RoboCup team to compete in the Iran Open. After the competition I was planning on doing some travelling in Thailand. Unfortunately, the trip got cancelled. Since UBC is one of the team sponsors, they have a policy which requires that we get permission to travel to DFAIT level 3 regions. The official response was that the faculty would not give approval for the trip (due to the current threat level and the fact that the trip is not academically essential). Since we had already purchased plane tickets, the cancellation cost the team quite a bit of money. In July our team will be travelling to Turkey to compete in RoboCup 2011.

Monday, March 21, 2011

html5cards.org

Today I purchased a domain name for the HTML5 card game website Simon and I have been working on: html5cards.org

Tuesday, March 15, 2011

HTML5 Card Games

Simon and I have been making steady progress on our multiplayer card game website. The project is currently hosted at http://html5cards.appspot.com. We have implemented one game so far (German Bridge) which should be mostly functional. Let us know if you see any bugs or have feedback.

Tuesday, March 08, 2011

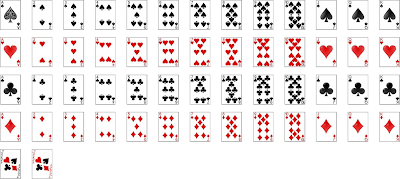

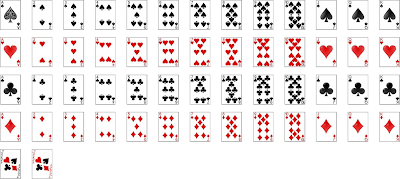

Face Cards

I have released a new version of my vector playing cards. It turns out the designs for the face cards are in the public domain. I compared several brands of cards and they use the exact same designs. I scanned the cards and vectorized them using potrace. After vectorizing them I did a lot of touch-up work using Inkscape.

I have released a new version of my vector playing cards. It turns out the designs for the face cards are in the public domain. I compared several brands of cards and they use the exact same designs. I scanned the cards and vectorized them using potrace. After vectorizing them I did a lot of touch-up work using Inkscape.

Sunday, March 06, 2011

Today I learned...

...that I am currently travelling at the speed of light. In fact, all objects in the universe are travelling at exactly the same speed. Although I am travelling slowly in the three spatial dimensions, I am travelling quickly in the time dimension. The combined speed of any object through the four spacetime dimensions is exactly the same. Light travels completely in the three spatial dimensions and doesn't travel at all through time. Photons never age. This also explains why we can't travel faster than the speed of light.

I am quite surprised I didn't learn this fact earlier in life. I somehow managed to make it through physics and astronomy classes learning about Einstein's relativity without ever making this simple connection!

I am quite surprised I didn't learn this fact earlier in life. I somehow managed to make it through physics and astronomy classes learning about Einstein's relativity without ever making this simple connection!

Friday, March 04, 2011

Vector Playing Cards

I have started working with Simon on a project to create a multiplayer card game website hosted by Google App Engine. For the website we need high quality images of each poker card. Ideally the images would be in a vector format so that we can scale them to any resolution. We found that there are not many options that don't have restrictive licenses (here is one exception).

Instead I decided to create a deck of vector graphics cards from scratch using Inkscape. I created most of the artwork myself except for the ace of spades:

I based this design on artwork by Suzanne Tyson. Since the source image was rasterized, I used the potrace algorithm to vectorize it.

I based this design on artwork by Suzanne Tyson. Since the source image was rasterized, I used the potrace algorithm to vectorize it.

I am releasing the images into the public domain. This means that they can be used for any purpose without any attribution (although attribution would be appreciated). I have created a Google Code project to host the SVG source code and also posted pictures of the cards to my Picasa account.

Instead I decided to create a deck of vector graphics cards from scratch using Inkscape. I created most of the artwork myself except for the ace of spades:

I based this design on artwork by Suzanne Tyson. Since the source image was rasterized, I used the potrace algorithm to vectorize it.

I based this design on artwork by Suzanne Tyson. Since the source image was rasterized, I used the potrace algorithm to vectorize it.I am releasing the images into the public domain. This means that they can be used for any purpose without any attribution (although attribution would be appreciated). I have created a Google Code project to host the SVG source code and also posted pictures of the cards to my Picasa account.

Monday, February 28, 2011

Friday, February 25, 2011

The Greatest Unsolved Problems

In 2000, the Clay Mathematics Institute published a list of seven unsolved problems in mathematics called the Millennium Prize Problems. There is a prize of US$1,000,000 for solving each problem. One of these problems has already been solved. In 1900, David Hilbert published a list of 23 unsolved problems in mathematics. Looking at the status of these problems on Wikipedia, only five of them remain unresolved.

Although there are many unsolved problems in science and mathematics, we have been making steady progress in solving open problems. Fermat's Last Theorem was conjectured in 1637 and was solved in 1995. The four color theorem was stated in 1852 and proven in 1976. Throughout human history we seem to be systematically progressing and accumulating scientific knowledge.

I have compiled a list of what I consider to be the five most important unsolved problems. Ranking the importance of problems is of course subjective. Biologists would probably be biased towards biology problems and physicists towards physics problems. I have tried to avoid being too biased towards computer science problems. Here is the list, ranked from most important to least:

1) Theory of Everything:

The Theory of Everything (TOE) is the most important unsolved problem in physics. As the name suggests, this theory would fully explain all known physical phenomena in the universe. The complexity of the universe does not necessarily mean that TOE needs to be complex. As observed in chaos theory and cellular automaton, a set of extremely simple rules can lead to incredible complexity. Albert Einstein spent the last few decades of his life searching for TOE (which he referred to as unified field theory). He failed.

The Theory of Everything (TOE) is the most important unsolved problem in physics. As the name suggests, this theory would fully explain all known physical phenomena in the universe. The complexity of the universe does not necessarily mean that TOE needs to be complex. As observed in chaos theory and cellular automaton, a set of extremely simple rules can lead to incredible complexity. Albert Einstein spent the last few decades of his life searching for TOE (which he referred to as unified field theory). He failed.

Einstein's general theory of relativity explains the universe at large scales. So far, all experimental evidence confirms general relativity. Quantum mechanics explains the universe at the scale of subatomic particles. Once again, all experimental evidence confirms quantum mechanics. Unfortunately, these two theories can not both be right. In extreme conditions like black holes and the Big Bang, these theories seem to contradict each other. String theory seems to be a promising candidate for a TOE. It resolves the tension between general relativity and quantum mechanics. However, string theory has yet been unable to produce testable experimental predictions.

2) Intelligence:

There are several definitions of intelligence. When comparing the intelligence of animals, most people agree that humans are more intelligent than dogs and dogs are more intelligent than goldfish. How can we quantify intelligence? Several tests have been created that attempt to measure and compare human intelligence (such as the IQ test). Some of these tests are based directly on pattern matching and prediction skills.

There are several definitions of intelligence. When comparing the intelligence of animals, most people agree that humans are more intelligent than dogs and dogs are more intelligent than goldfish. How can we quantify intelligence? Several tests have been created that attempt to measure and compare human intelligence (such as the IQ test). Some of these tests are based directly on pattern matching and prediction skills.

Based on my research in the field of data compression, I have my own definition of intelligence which is easily quantifiable. Intelligence can be measured by the cross entropy rate of a predictive compression algorithm on multidimensional sequence data. This basically means that an algorithm which is better at predicting temporal patterns in data is more intelligent. The human brain is extremely good at recognising and predicting patterns in the massively parallel sensory data it processes.

Most data compression algorithms work on one-dimensional sequence data. For example, text is a one-dimensional sequence of characters. Studies have been performed to try to measure the cross entropy rate of humans trying to predict the next character in a natural language text sequence: 0.6 to 1.3 bits per character. The best text compression algorithms (such as PAQ8) are starting to approach the upper end of this range. Unfortunately, compressing one-dimensional data is easy compared to high-dimensional data. In my opinion, any compression algorithm which could approach the predictive capability of the human brain on billions of parallel inputs would be truly intelligent. The most promising framework I have seen for explaining the algorithm behind human intelligence is hierarchical temporal memory.

If humans are so intelligent, what is the point of making intelligent machines? Well, if we could design an algorithm which becomes more intelligent just by giving it more computational resources, we could make it arbitrarily intelligent. If it could surpass human intelligence, there could be a singularity in which the AI can design a new AI which is even more intelligent than itself (ad infinitum). Achieving this would allow AI to solve the other four open problems on this list (assuming they are solvable!).

3) Dark Energy and Dark Matter:

Experiments indicate that the universe is expanding at an accelerated rate. This is surprising because mass in the universe should cause gravity to slow its expansion. Dark energy is currently the most accepted theory to explain the expansion. Not much is known about dark energy besides the fact that based on its effect on expansion, about 74% of the universe is dark energy.

Experiments indicate that the universe is expanding at an accelerated rate. This is surprising because mass in the universe should cause gravity to slow its expansion. Dark energy is currently the most accepted theory to explain the expansion. Not much is known about dark energy besides the fact that based on its effect on expansion, about 74% of the universe is dark energy.

So if 74% of the universe is made up of dark energy, you might assume the rest of it is the visible universe. Wrong. The visible universe only accounts for about 4%. The other 22% is known as dark matter. Once again, not very much is known about dark matter. Advances in our understanding of dark energy and dark matter would provide insight into the nature of our universe and its eventual fate.

4) One-way Functions:

Any computer scientists reading this post might be surprised by the fact that the P = NP problem is not on my list. As Scott Aaronson points out, solving the P = NP problem could have a huge impact:

Any computer scientists reading this post might be surprised by the fact that the P = NP problem is not on my list. As Scott Aaronson points out, solving the P = NP problem could have a huge impact:

The existence of one-way functions is another famous problem in computer science. The existence (or non-existence) of one-way functions would have a bigger impact than proving that P ≠ NP. In fact, the existence of one-way functions would in itself prove that P ≠ NP. If one-way functions do not exist then secure public key cryptography is impossible. Either outcome results in a useful result.

5) Abiogenesis:

Abiogenesis is the study of the origin of life on Earth. Having little chemistry/biology background, I can't give a very good comparison between different abiogenesis theories. However, I can appreciate the importance of understanding how life arose. Not only would it enhance our knowledge of life on Earth, it would assist in the search for extra terrestrial life.

Abiogenesis is the study of the origin of life on Earth. Having little chemistry/biology background, I can't give a very good comparison between different abiogenesis theories. However, I can appreciate the importance of understanding how life arose. Not only would it enhance our knowledge of life on Earth, it would assist in the search for extra terrestrial life.

Although there are many unsolved problems in science and mathematics, we have been making steady progress in solving open problems. Fermat's Last Theorem was conjectured in 1637 and was solved in 1995. The four color theorem was stated in 1852 and proven in 1976. Throughout human history we seem to be systematically progressing and accumulating scientific knowledge.

I have compiled a list of what I consider to be the five most important unsolved problems. Ranking the importance of problems is of course subjective. Biologists would probably be biased towards biology problems and physicists towards physics problems. I have tried to avoid being too biased towards computer science problems. Here is the list, ranked from most important to least:

1) Theory of Everything:

The Theory of Everything (TOE) is the most important unsolved problem in physics. As the name suggests, this theory would fully explain all known physical phenomena in the universe. The complexity of the universe does not necessarily mean that TOE needs to be complex. As observed in chaos theory and cellular automaton, a set of extremely simple rules can lead to incredible complexity. Albert Einstein spent the last few decades of his life searching for TOE (which he referred to as unified field theory). He failed.

The Theory of Everything (TOE) is the most important unsolved problem in physics. As the name suggests, this theory would fully explain all known physical phenomena in the universe. The complexity of the universe does not necessarily mean that TOE needs to be complex. As observed in chaos theory and cellular automaton, a set of extremely simple rules can lead to incredible complexity. Albert Einstein spent the last few decades of his life searching for TOE (which he referred to as unified field theory). He failed.Einstein's general theory of relativity explains the universe at large scales. So far, all experimental evidence confirms general relativity. Quantum mechanics explains the universe at the scale of subatomic particles. Once again, all experimental evidence confirms quantum mechanics. Unfortunately, these two theories can not both be right. In extreme conditions like black holes and the Big Bang, these theories seem to contradict each other. String theory seems to be a promising candidate for a TOE. It resolves the tension between general relativity and quantum mechanics. However, string theory has yet been unable to produce testable experimental predictions.

2) Intelligence:

There are several definitions of intelligence. When comparing the intelligence of animals, most people agree that humans are more intelligent than dogs and dogs are more intelligent than goldfish. How can we quantify intelligence? Several tests have been created that attempt to measure and compare human intelligence (such as the IQ test). Some of these tests are based directly on pattern matching and prediction skills.

There are several definitions of intelligence. When comparing the intelligence of animals, most people agree that humans are more intelligent than dogs and dogs are more intelligent than goldfish. How can we quantify intelligence? Several tests have been created that attempt to measure and compare human intelligence (such as the IQ test). Some of these tests are based directly on pattern matching and prediction skills.Based on my research in the field of data compression, I have my own definition of intelligence which is easily quantifiable. Intelligence can be measured by the cross entropy rate of a predictive compression algorithm on multidimensional sequence data. This basically means that an algorithm which is better at predicting temporal patterns in data is more intelligent. The human brain is extremely good at recognising and predicting patterns in the massively parallel sensory data it processes.

Most data compression algorithms work on one-dimensional sequence data. For example, text is a one-dimensional sequence of characters. Studies have been performed to try to measure the cross entropy rate of humans trying to predict the next character in a natural language text sequence: 0.6 to 1.3 bits per character. The best text compression algorithms (such as PAQ8) are starting to approach the upper end of this range. Unfortunately, compressing one-dimensional data is easy compared to high-dimensional data. In my opinion, any compression algorithm which could approach the predictive capability of the human brain on billions of parallel inputs would be truly intelligent. The most promising framework I have seen for explaining the algorithm behind human intelligence is hierarchical temporal memory.

If humans are so intelligent, what is the point of making intelligent machines? Well, if we could design an algorithm which becomes more intelligent just by giving it more computational resources, we could make it arbitrarily intelligent. If it could surpass human intelligence, there could be a singularity in which the AI can design a new AI which is even more intelligent than itself (ad infinitum). Achieving this would allow AI to solve the other four open problems on this list (assuming they are solvable!).

3) Dark Energy and Dark Matter:

Experiments indicate that the universe is expanding at an accelerated rate. This is surprising because mass in the universe should cause gravity to slow its expansion. Dark energy is currently the most accepted theory to explain the expansion. Not much is known about dark energy besides the fact that based on its effect on expansion, about 74% of the universe is dark energy.

Experiments indicate that the universe is expanding at an accelerated rate. This is surprising because mass in the universe should cause gravity to slow its expansion. Dark energy is currently the most accepted theory to explain the expansion. Not much is known about dark energy besides the fact that based on its effect on expansion, about 74% of the universe is dark energy.So if 74% of the universe is made up of dark energy, you might assume the rest of it is the visible universe. Wrong. The visible universe only accounts for about 4%. The other 22% is known as dark matter. Once again, not very much is known about dark matter. Advances in our understanding of dark energy and dark matter would provide insight into the nature of our universe and its eventual fate.

4) One-way Functions:

Any computer scientists reading this post might be surprised by the fact that the P = NP problem is not on my list. As Scott Aaronson points out, solving the P = NP problem could have a huge impact:

Any computer scientists reading this post might be surprised by the fact that the P = NP problem is not on my list. As Scott Aaronson points out, solving the P = NP problem could have a huge impact:If P = NP, then the world would be a profoundly different place than we usually assume it to be. There would be no special value in "creative leaps," no fundamental gap between solving a problem and recognizing the solution once it's found. Everyone who could appreciate a symphony would be Mozart; everyone who could follow a step-by-step argument would be Gauss...The problem is that most people assume that P ≠ NP. Although a proof of this would have some theoretical value, it would not have a large impact. This is why the problem got bumped off my list.

The existence of one-way functions is another famous problem in computer science. The existence (or non-existence) of one-way functions would have a bigger impact than proving that P ≠ NP. In fact, the existence of one-way functions would in itself prove that P ≠ NP. If one-way functions do not exist then secure public key cryptography is impossible. Either outcome results in a useful result.

5) Abiogenesis:

Abiogenesis is the study of the origin of life on Earth. Having little chemistry/biology background, I can't give a very good comparison between different abiogenesis theories. However, I can appreciate the importance of understanding how life arose. Not only would it enhance our knowledge of life on Earth, it would assist in the search for extra terrestrial life.

Abiogenesis is the study of the origin of life on Earth. Having little chemistry/biology background, I can't give a very good comparison between different abiogenesis theories. However, I can appreciate the importance of understanding how life arose. Not only would it enhance our knowledge of life on Earth, it would assist in the search for extra terrestrial life.

Wednesday, January 12, 2011

Machine Learning Contests

I just found another machine learning contest site called TunedIT. TunedIT seems to be very similar to Kaggle. On first inspection one of the TunedIT contests seemed to be very interesting - categorizing the genre and instruments in music. My current area of research involves one dimensional time-series data, so I thought audio would be an interesting domain to work with. Unfortunately, instead of providing the raw audio data, the contest organizers decided to process the audio and provide feature vectors instead. No longer being a one-dimensional temporal problem, the contest has lost much of its appeal.

I have been treating the traffic prediction contest on Kaggle as a one-dimensional temporal prediction problem (considering every road segment as a completely independent problem). I am currently ranked 8th out of 214 teams. I am guessing that my ranking won't improve before the contest end because I don't have any new ideas on how to increase my score. Most of my submissions so far have been used for parameter tuning. However, my parameter tuning efforts seem to have reached a local optimum.

I have been treating the traffic prediction contest on Kaggle as a one-dimensional temporal prediction problem (considering every road segment as a completely independent problem). I am currently ranked 8th out of 214 teams. I am guessing that my ranking won't improve before the contest end because I don't have any new ideas on how to increase my score. Most of my submissions so far have been used for parameter tuning. However, my parameter tuning efforts seem to have reached a local optimum.

Tuesday, January 11, 2011

Ambigram Failure

Two people who don't know my name tried to decrypt my ambigram. Their responses were "liynh knoll" and "liymr uwnq". :(

Wednesday, January 05, 2011

Subscribe to:

Posts (Atom)